Powered by the Ampere architecture, the NVIDIA A800 40GB Active Graphics Card from PNY accelerates AI development, data analytics, and other high-performance computing (HPC) workloads when installed alongside a separately available NVIDIA RTX companion GPU in your workstation.

Equipped with 6,912 CUDA cores and 432 third-generation Tensor cores, this graphics card can achieve up to 9.7 TFLOPS of double-precision performance. It also features 40GB of HBM2 memory with 1.5 TB/s of memory bandwidth. Installing a second A800 40GB Active card and linking the pair over a 400 GB/s NVLink GPU-to-GPU connection expands the effective memory to 80GB for massive datasets. The card occupies an available PCI Express 4.0 x16 slot and requires a compatible companion GPU for graphics output to monitors. A 3-year license for the NVIDIA AI Enterprise platform is included.

Amplified Performance When Paired with RTX

To support display functionality and deliver high-performance graphics for visual applications, the computing capabilities of NVIDIA A800 40GB Active are designed to be paired with NVIDIA RTX-accelerated GPUs. NVIDIA RTX 4000 Ada Generation, RTX A4000, and T1000 8GB GPUs are certified to run in tandem with A800 40GB Active, delivering powerful real-time ray tracing and AI-accelerated graphics performance in a single-slot form factor.

Third-Generation Tensor Cores

Third-generation Tensor cores offer performance and versatility for a wide range of AI and HPC applications. Support for double-precision (FP64) and Tensor Float 32 (TF32) precision provides up to 2X the performance and efficiency of the previous generation, and hardware support for structural sparsity doubles the throughput for inferencing.
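To give a feel for what TF32 precision means numerically, here is a minimal Python sketch that emulates it on the CPU. It assumes the commonly documented TF32 layout (FP32's 8-bit exponent with a 10-bit mantissa), which this listing does not spell out. It truncates the extra mantissa bits, whereas real hardware may round differently. The function name `to_tf32` is hypothetical and used only for illustration.

```python
import numpy as np

def to_tf32(values):
    """Emulate TF32 precision by clearing the low 13 mantissa bits of FP32 values.

    Assumption: TF32 keeps FP32's sign and 8-bit exponent but only 10 of the
    23 mantissa bits. This sketch truncates; hardware rounding may differ.
    """
    a = np.atleast_1d(np.asarray(values, dtype=np.float32))
    bits = a.view(np.uint32)
    mask = np.uint32(0xFFFFE000)  # keep sign, exponent, and top 10 mantissa bits
    return (bits & mask).view(np.float32)

if __name__ == "__main__":
    # 1 + 2**-10 fits in a 10-bit mantissa; 1 + 2**-11 does not and collapses to 1.0.
    print(to_tf32([1 + 2**-10, 1 + 2**-11]))
```

The relative error introduced by this truncation stays below 2^-10, which is why TF32 is generally treated as adequate for training and inference math while keeping FP32's dynamic range.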

Ultrafast HBM2 Memory

This GPU delivers massive computational throughput with 40GB of high-speed HBM2 memory and 1.5 TB/s of memory bandwidth, an increase of more than 70% over the previous generation. There is also significantly more on-chip memory, including a 40MB level 2 cache, to accelerate the most computationally intense AI and HPC workloads.

NVIDIA AI Enterprise Platform

The NVIDIA AI Enterprise software platform accelerates and simplifies deploying AI at scale, allowing organizations to develop once and deploy anywhere. Coupling this software platform with the A800 40GB Active GPU lets AI developers build, iterate, and refine AI models on workstations using the included frameworks. This simplifies scaling and reserves costly data center computing resources for larger, more demanding computations.

Power Generative AI

Using neural networks to identify patterns and structures within existing data, generative AI applications enable users to generate new and original content across a wide variety of inputs and outputs, including images, sounds, animation, and 3D models. Use the NVIDIA NeMo Framework, the generative AI solution included in NVIDIA AI Enterprise, together with the NVIDIA A800 40GB Active GPU for easy, fast, and customizable generative AI model development.

Inference

Inference is where AI delivers results, providing actionable insights by operationalizing trained models. With 432 third-generation Tensor cores and 6,912 CUDA cores, the A800 40GB Active delivers up to twice the inference performance of the previous generation, with support for structural sparsity and a broad range of precisions, including TF32, INT8, and FP64. AI developers can use NVIDIA inference software, including NVIDIA TensorRT and NVIDIA Triton Inference Server, which are part of NVIDIA AI Enterprise, to simplify and optimize the deployment of AI models at scale.
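The structural sparsity mentioned above is usually described in NVIDIA's Ampere material as a 2:4 pattern, where two of every four consecutive weights are zero. The NumPy sketch below illustrates that pattern under that assumption. Picking the two smallest-magnitude weights to drop is one simple heuristic, not necessarily what NVIDIA's tools do, and `prune_2_of_4` is a hypothetical helper name.

```python
import numpy as np

def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four weights.

    Assumption: the 2:4 pattern (2 non-zeros per 4 consecutive values) is the
    structure the Tensor cores accelerate; magnitude pruning is illustrative.
    """
    w = np.asarray(weights, dtype=np.float32)
    if w.shape[-1] % 4 != 0:
        raise ValueError("last dimension must be a multiple of 4")
    groups = w.reshape(-1, 4)
    # Column indices of the two smallest magnitudes within each group.
    drop = np.argsort(np.abs(groups), axis=1)[:, :2]
    pruned = groups.copy()
    np.put_along_axis(pruned, drop, 0.0, axis=1)
    return pruned.reshape(w.shape)

if __name__ == "__main__":
    row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.1]
    print(prune_2_of_4(row))
```

Because exactly half of the values in every group are zero, the hardware can skip them, which is where the doubled inference throughput claim comes from. Whether a pruned model keeps its accuracy has to be checked per model.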

High-Performance Computing

The A800 40GB Active GPU delivers incredible performance for GPU-accelerated computer-aided engineering (CAE) applications. Engineering and product development professionals can run large-scale simulations for finite element analysis (FEA), computational fluid dynamics (CFD), construction engineering management (CEM), and other engineering analysis codes in full FP64 precision with incredible speed, shortening development timelines and accelerating time to value. With the addition of RTX-accelerated GPUs providing display capabilities, scientists and engineers can visualize large-scale simulations and models in full design fidelity.

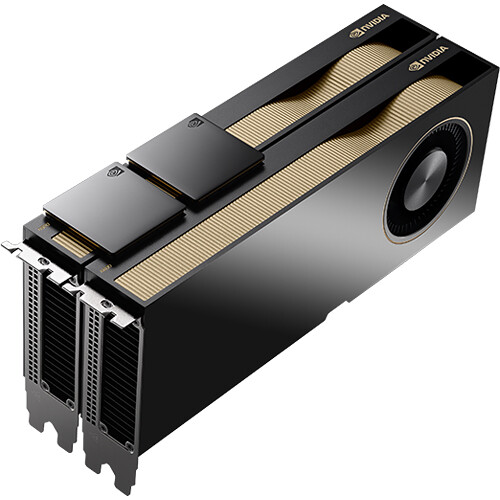

Third-Generation NVIDIA NVLink

Increased GPU-to-GPU interconnect bandwidth provides a single scalable memory to accelerate compute workloads and tackle larger datasets. Connect a pair of NVIDIA A800 40GB Active GPUs with NVIDIA NVLink to increase the effective memory footprint to 80GB and scale application performance by enabling bidirectional GPU-to-GPU data transfers at rates up to 400 GB/s.
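As a rough illustration of why the NVLink bridge matters, the sketch below compares the theoretical time to move one card's 40 GB of memory contents to the other card over NVLink versus over the PCI Express 4.0 x16 slot. It assumes the 400 GB/s bidirectional figure splits evenly into 200 GB/s per direction, and it uses the standard PCIe 4.0 signaling rate (16 GT/s per lane with 128b/130b encoding). These are peak numbers, and real transfers will be slower. All names are illustrative.

```python
NVLINK_BIDIRECTIONAL_GB_S = 400.0  # from the listing: up to 400 GB/s bidirectional
PCIE4_GT_S_PER_LANE = 16.0         # PCIe 4.0 signaling rate per lane
PCIE_LANES = 16                    # x16 slot

def nvlink_one_way_gb_s():
    """Assumption: bidirectional bandwidth is split evenly between directions."""
    return NVLINK_BIDIRECTIONAL_GB_S / 2

def pcie4_x16_one_way_gb_s():
    """Theoretical PCIe 4.0 x16 bandwidth per direction after 128b/130b encoding."""
    return PCIE4_GT_S_PER_LANE * PCIE_LANES * (128 / 130) / 8

def transfer_seconds(gigabytes, gb_per_second):
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return gigabytes / gb_per_second

if __name__ == "__main__":
    for name, rate in [("NVLink", nvlink_one_way_gb_s()),
                       ("PCIe 4.0 x16", pcie4_x16_one_way_gb_s())]:
        print(f"{name}: {rate:.1f} GB/s, 40 GB in {transfer_seconds(40, rate):.2f} s")
```

Under these assumptions the NVLink path is roughly six times faster per direction than the PCIe slot, which is what makes treating the two cards' memory as one 80GB pool practical.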

EAN: 751492779430

PNY NVIDIA A800 Specs

Key Specs

| GPU Model | NVIDIA A800 Active |

| Stream Processors | 6912 CUDA Cores 432 Tensor Cores |

| Interface | PCI Express 4.0 x16 |

| GPU Memory | 40 GB |

| Memory Interface | HBM2 |

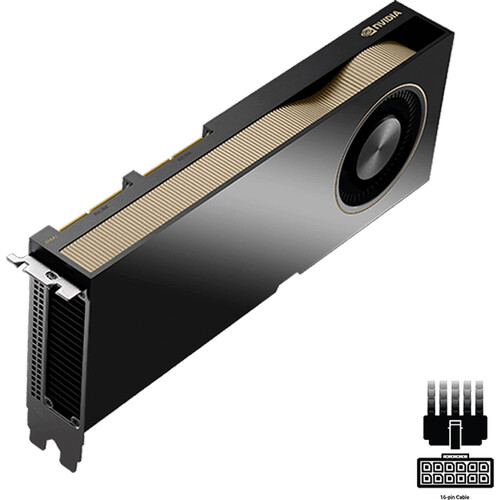

| Power Connection | 1x 16-Pin PCI Connector |

| GPU Width | Dual-Slot |

GPU

| Specification | Value |
| --- | --- |
| GPU Model | NVIDIA A800 Active |
| Stream Processors | 6912 CUDA Cores, 432 Tensor Cores |
| Floating Point Performance | Double Precision Performance: 9.7 TFLOPS; Single Precision Performance: 19.5 TFLOPS |
| Interface | PCI Express 4.0 x16 |
| OS Compatibility | Windows 10 to 11; Ubuntu 18.04 or Later; Linux; UNIX |
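The floating-point figures above can be cross-checked with simple arithmetic. The sketch below assumes each CUDA core completes one fused multiply-add (counted as 2 FLOPs) per clock, which is a common convention for peak-rate figures but is not stated in this listing. The 240 W value is the maximum power consumption from the Power Requirements section. Function names are illustrative.

```python
CUDA_CORES = 6912
FP32_TFLOPS = 19.5
FP64_TFLOPS = 9.7
MAX_POWER_W = 240

def implied_clock_ghz(tflops, cores, flops_per_core_per_cycle=2):
    """Clock rate implied by a peak TFLOPS figure.

    Assumption: one fused multiply-add (2 FLOPs) per core per cycle.
    """
    return tflops * 1e12 / (cores * flops_per_core_per_cycle) / 1e9

def gflops_per_watt(tflops, watts):
    """Peak GFLOPS delivered per watt at maximum board power."""
    return tflops * 1000 / watts

if __name__ == "__main__":
    print(f"Implied clock: {implied_clock_ghz(FP32_TFLOPS, CUDA_CORES):.2f} GHz")
    print(f"FP64/FP32 ratio: {FP64_TFLOPS / FP32_TFLOPS:.2f}")
    print(f"Peak FP64 efficiency: {gflops_per_watt(FP64_TFLOPS, MAX_POWER_W):.1f} GFLOPS/W")
```

Under that assumption the single-precision figure implies a clock of about 1.41 GHz, and the double-precision rate works out to roughly half the single-precision rate.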

Memory

| Specification | Value |
| --- | --- |
| GPU Memory | 40 GB |
| Memory Interface | HBM2 |
| Memory Interface Width | 5120-Bit |
| Memory Bandwidth | 1555.2 GB/s |
| SSG Storage | No |
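The memory bandwidth figure follows from the interface width and the per-pin data rate. The listing gives the width (5120 bits) and the bandwidth (1555.2 GB/s) but not the data rate, so the sketch below derives the implied per-pin rate from those two values. The helper names are illustrative, and the time to sweep all 40 GB is an idealized lower bound.

```python
BUS_WIDTH_BITS = 5120
BANDWIDTH_GB_S = 1555.2
MEMORY_GB = 40

def per_pin_gbit_s(bandwidth_gb_s, bus_width_bits):
    """Per-pin data rate implied by total bandwidth and bus width."""
    return bandwidth_gb_s * 8 / bus_width_bits

def bandwidth_gb_s(bus_width_bits, pin_rate_gbit_s):
    """Total bandwidth in GB/s: width in bits times per-pin rate, divided by 8."""
    return bus_width_bits * pin_rate_gbit_s / 8

def full_sweep_ms(memory_gb, bw_gb_s):
    """Ideal time to read the whole memory once, in milliseconds."""
    return memory_gb / bw_gb_s * 1000

if __name__ == "__main__":
    rate = per_pin_gbit_s(BANDWIDTH_GB_S, BUS_WIDTH_BITS)
    print(f"Implied per-pin rate: {rate:.2f} Gbit/s")
    print(f"Full 40 GB sweep: {full_sweep_ms(MEMORY_GB, BANDWIDTH_GB_S):.1f} ms")
```

The implied rate is 2.43 Gbit/s per pin, and reading the full 40 GB once takes about 25.7 ms at peak bandwidth.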

Power Requirements

| Specification | Value |
| --- | --- |
| Max Power Consumption | 240 W |
| Power Connection | 1x 16-Pin PCI Connector |

Dimensions

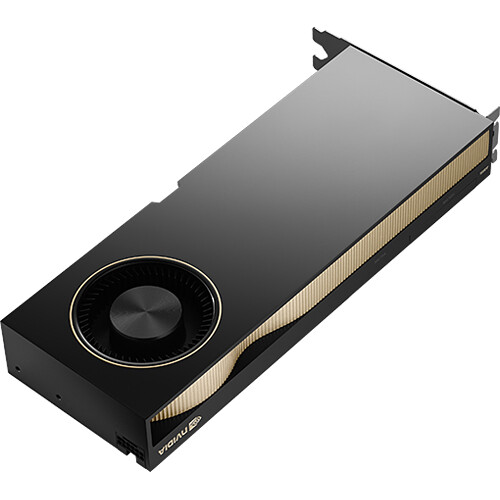

| Specification | Value |
| --- | --- |
| Expansion Slot Compatibility | Full Height |
| Height | 4.4" / 111.8 mm |
| Length | 10.5" / 266.7 mm |
| GPU Width | Dual-Slot |

General

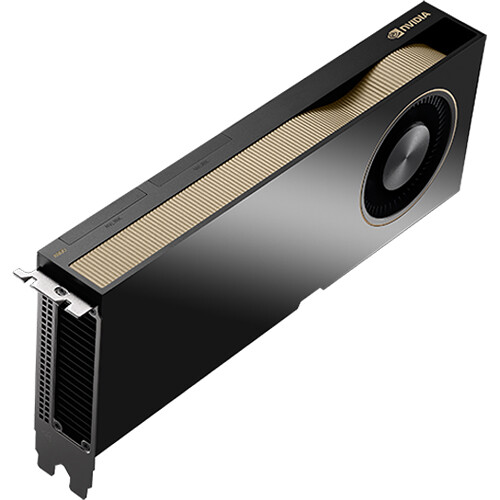

| Specification | Value |
| --- | --- |
| Cooler Type | Blower-Style Fan |

In the Box

- PNY NVIDIA A800 40GB Active Graphics Card

- CEM5 16-Pin to Dual 8-Pin PCIe Auxiliary Power Cable

- 3-Year Manufacturer Warranty
